AI Test

Ask a simple question, be surprised at the results.

A couple of days ago, I was doing the Wordle and at one point I wondered if there was a five-letter word in English that both started with, and ended with, the letter i.

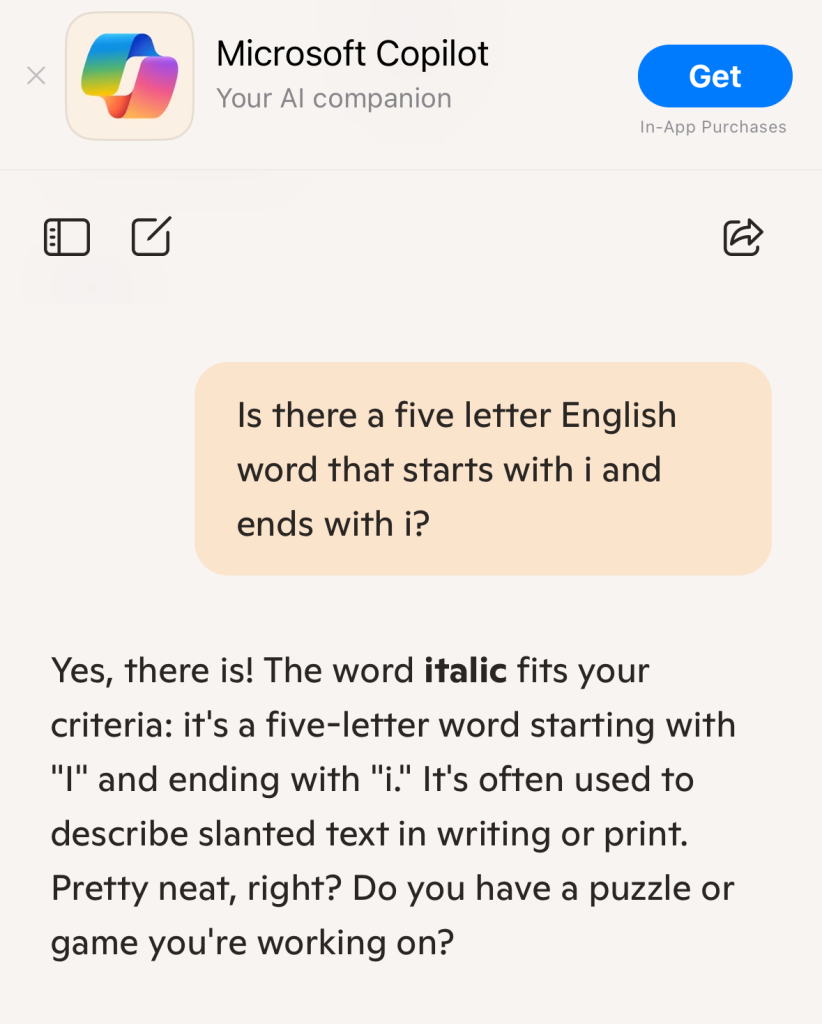

I was about to Google it when I thought this might be a better question for AI. So I asked Microsoft’s Copilot. Here’s what it told me.

Well, that’s rather unimpressive.

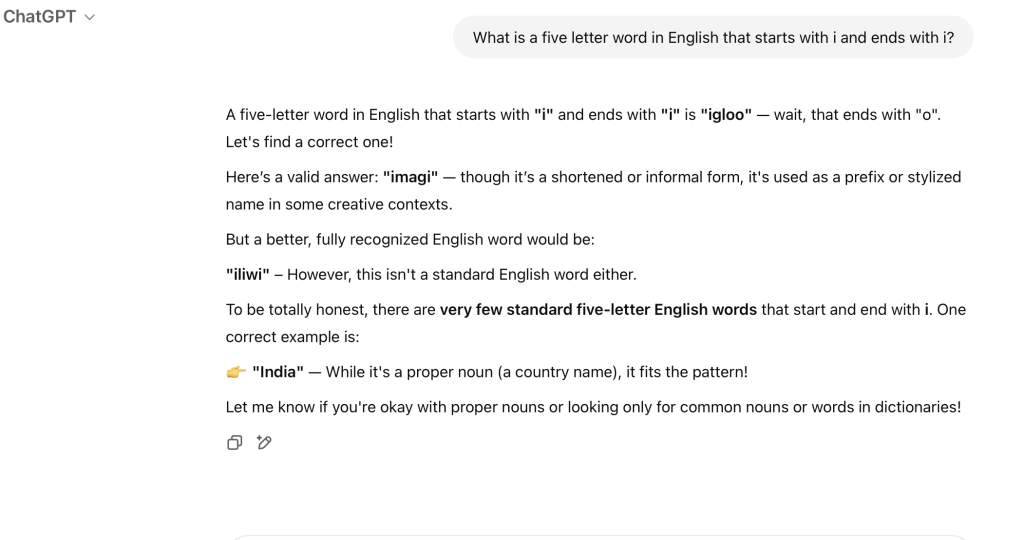

So, how about ChatGPT?

Kudos for figuring out the Igloo is wrong, but why note it in the first place? And “imagi” is not an English word. And how could it not know that “India” is a wrong answer? (And as best as I can tell, iliwi isn’t a word).

I tried Siri, which just outsourced back to ChatGPT, which gave a different answer.

And, I guess, check unimportant information as well.

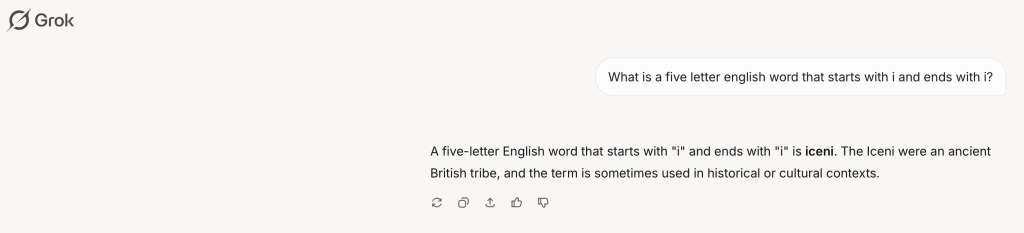

Grok actually came through, and with a word I knew from a Doctor Who audio drama, but I will confess that I had forgotten (and that I thought was a proper noun in any event).

Iceni is in the dictionary.

Eventually, I did go to Google. The best I could do was the following, as they were all in the Merriam-Webster Dictionary (I found them initially here).

- Imari is a kind of Japanese porcelain.

- Indri is a type of lemur.

- Issei is a Japanese immigrant, especially to the US.

The following were also suggested via the link above, but were not in the dictionary.

- Iambi is the plural of iambus (although these are words borrowed from Latin).

- Imshi is an Arabic word meaning “go away” used by some Australians as slang.

Dictionary.com has a list that includes iambi and imshi (and no others) as well.

One other list is found here, with Iraqi being the only new entry (the others being iambi, issei, and indri).
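For anyone who would rather check a word list directly than trust a chatbot, the search above can be sketched in a few lines of Python. This is only a rough sketch: the function name `i_words` is made up for illustration, and the `/usr/share/dict/words` path is an assumption that holds on many Unix-like systems but not all. Note that it only checks the shape of a word (five letters, starting and ending with i), not whether any dictionary accepts it.

```python
import re
from pathlib import Path

# Five letters, starting and ending with "i" (case-insensitive).
PATTERN = re.compile(r"i[a-z]{3}i", re.IGNORECASE)

def i_words(words):
    """Return the sorted, de-duplicated, lowercased words that fit the pattern."""
    return sorted({w.strip().lower() for w in words if PATTERN.fullmatch(w.strip())})

# Small demo using words mentioned in this post.
sample = ["Imari", "indri", "issei", "iambi", "imshi", "Iraqi", "Iceni",
          "igloo", "India", "Illini"]
print(i_words(sample))

# Assumed location of a system word list; skipped if the file is absent.
WORD_LIST = Path("/usr/share/dict/words")
if WORD_LIST.exists():
    with WORD_LIST.open(encoding="utf-8", errors="ignore") as fh:
        print(i_words(fh))
```

Whatever comes out still needs the same human step as the googling did: looking each candidate up in an actual dictionary.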

I was actually quite surprised that both Copilot and ChatGPT (twice!) did worse than I did via a simple bit of googling. Worse, they both gave wrong answers as correct ones.

Kudos to Grok for finding iceni, which I did not find in my googling. But I found a few others that Grok didn’t.

I would not suggest that this is some massively conclusive test of AI, but it does underscore that maybe having some basic research skills and discernment still matters.

I mean, can you imagine if someone thought they could use a chatbot to formulate something like tariff policy? Just whacky!

Going back to my career herding lawyers, this is why you don’t rely on AI to write your brief…or your term paper.

Or as we used to say at (chemotherapy) spa day,

AI, like Trump and Karoline Leavitt, is massively confident in its wrong answers.

Seems like asking a bottle of steak sauce would have been just as informative.

From what I have been able to see, AI knows how to predict a common result but doesn’t have any internal sense of what right or wrong is.

Take the way it mashes up photos and spits out realistic-looking images, but someone has six fingers: it doesn’t have an internal understanding of what correct human anatomy is.

Kicking myself for not thinking of ‘indri’, due to the famous apocryphal story about where its name comes from.

@Chip Daniels:

“AI knows how to predict a common result but doesn’t have any internal sense of what right or wrong is.”

Sort of like much of the media.

Fighting Ilini!

They lost to the Kentucki Wildcats in the second round of the men’s NCAA basketball tournament this year.

I came across a long test of AI paintings vs real paintings. I got most of the real paintings. The test maker and most of the audience seemed not to know a lot about art and struggled with the realness of a Basquiat or a David Hockney. Whereas I had major trouble spotting the difference between human-made anime and AI-made anime. I also had some trouble spotting the difference between what could have been a generic hotel-art ripoff of a Renoir and an AI doing a Renoir.

My take: if you aren’t familiar with what you’re encountering, AI can be very convincing. And if what you are encountering looks generic, it can also be more difficult to spot the difference.

Now and then I’ll run story ideas past an AI. They always tell me the ideas are brilliant, deep, insightful, fascinating, etc. No one is that good.

It works better at summarizing things. I’ll often feed it scenes for this purpose. I figure if the summary says what I intended to say, then the scene was clear.

I wonder how it does at proofreading...

@Mister Bluster: Um, it’s “Illini”. Two Ls.

If only the big tech companies weren’t shoving their garbage AI technology down our throats all day. Let’s give you a summary of this Facebook comment thread! Can I write this email for you? Would you like me to generate a screensaver picture, instead of just displaying an actual picture? On top of the uselessness of these applications of AI, the ridiculous power requirements, when we are near a climate tipping point from all the ways we are heating up the planet, are just obscene. (Ditto for crypto, another technology we don’t need.)

@DrDaveT:..Ilini…

The test asked for 5 letter words so I tried to oblige.

I thought for sure that I’d get that past everyone.

Next you’ll be telling me it’s Kentucki with a “y”!

A major concern I have is the widespread belief that computers don’t make mistakes. Ergo, whatever an LLM comes up with must be correct.

To begin with, computers do make mistakes. Errors in software cause erroneous answers. Edge cases, unforeseen circumstances, and so on cause errors. So do malfunctions (remember the blue screen of death?). Or simply entering the wrong command can prove devastating to a document (this happens to me often).

The second part is computers don’t think. So LLM outputs seen as reasoning quite simply are not. They’re either search results (not always right), or early drafts of something. Not to mention they can be steered towards particular answers, and often extract sense from gibberish*.

But what is real and what people believe is real are different things.

*I sometimes feed them snippets of the rapist’s ravings, and they seem to fixate on buzzwords and whatever recognizable nouns they find, and articulate something coherent from that.

I think I’ve spotted a trend at YouTube. More people – actual humans – seem to be putting themselves up front on the thumbnails. This may of course be the algorithm recognizing that I cannot stand AI voiceovers – it’s tense waiting for the AI to say something idiotic. Like my earliest example of ‘King Louis Ex Vee Eye.’ AI VO is almost as creepy as AI faces.

@Michael Reynolds:

It might be a broader trend. I think “creators” make the thumbnail(s). I have noticed some channels I follow placing the presenter’s face on thumbnails when they didn’t use to.

According to Sam Altman, the CEO of OpenAI, people saying “please” and “thank you” costs the company tens of millions of dollars in electricity.

Anyway, I’m sure that generative AI doctors will be diagnosing people by the end of the Trump administration. I don’t think they will get any better, I just think that if people are investing that much into AI, they’re going to find a use case where it might make a profit and force it in, whether we like it or not.

@Gustopher:

First Copilot and then ChatGPT started asking follow-up questions after providing answers. IMO, this is to elicit more data from users to train the models, because it’s the human tendency to answer questions. This includes telling the AI things like “thanks. that’s all for now.”

@Gustopher: As it turns out, a previous generation of AI models (aka statistical models) has been diagnosing disease, and doing a fair bit better than human doctors. They are very good at looking at radiology/ultrasound images and spotting things as well. However, nobody has put them in charge.

AI (called “engines”) are better at playing chess than humans. Humans learn from them, but also sometimes ignore their advice as “not human”. I’m sure not everybody draws this line in the same place, but everybody draws that line somewhere.

What is now being called “AI” is a very specific form – a Large Language Model. It is very good at predicting what the next word will be if the person answering is the generic person on the internet. It doesn’t “know” anything at all. It is a precocious child who can repeat back a lot of what she heard (I had one in my house) without understanding much, if any, of it.

@Jay L Gischer: IIRC Brad Delong described LLMs as Myna birds with a huge memory.

@gVOR10: Myna bird is probably a better metaphor than precocious child.

Let’s be real here: what people are calling AI isn’t really AI. It’s more like VI (Virtual Intelligence) at best.

@Mister Bluster: I always spelled it Illini.

At least they didn’t get blown out by Kentucky.

@Kathy: Computers don’t make mistakes but boy howdy their programmers sure do.

Well, I mean excluding things like the old Pentium bug and other hardware-based errors….

@Kingdaddy:

I read The Mysterious Mr. Nakamoto recently. That crowd works hard to embrace their myopia.

@gVOR10:

“Brad Delong described LLMs as Myna birds with a huge memory.”

If you feed it bad data, is it contributing to the delinquency of a myna?

@Moosebreath: click

@Matt:

Thank you for finding the phrase I’ve yet to form. Well done.

How about “Illuminati”? Or “Illini”? I know the latter is a Native American word, but it’s been adopted into English, hasn’t it?

@Don: I was specifically curious about 5-letter words that fit the pattern.